Mobile gaming has always been a balancing act, as developers have to juggle sharp visuals, smooth performance and battery life, often making compromises to keep everything in check on the limited hardware of smartphones. Arm, a company whose chip designs power most of the world’s mobile devices, is changing that equation with Neural Super Sampling (NSS), paired with dedicated neural accelerators in future Arm GPUs.

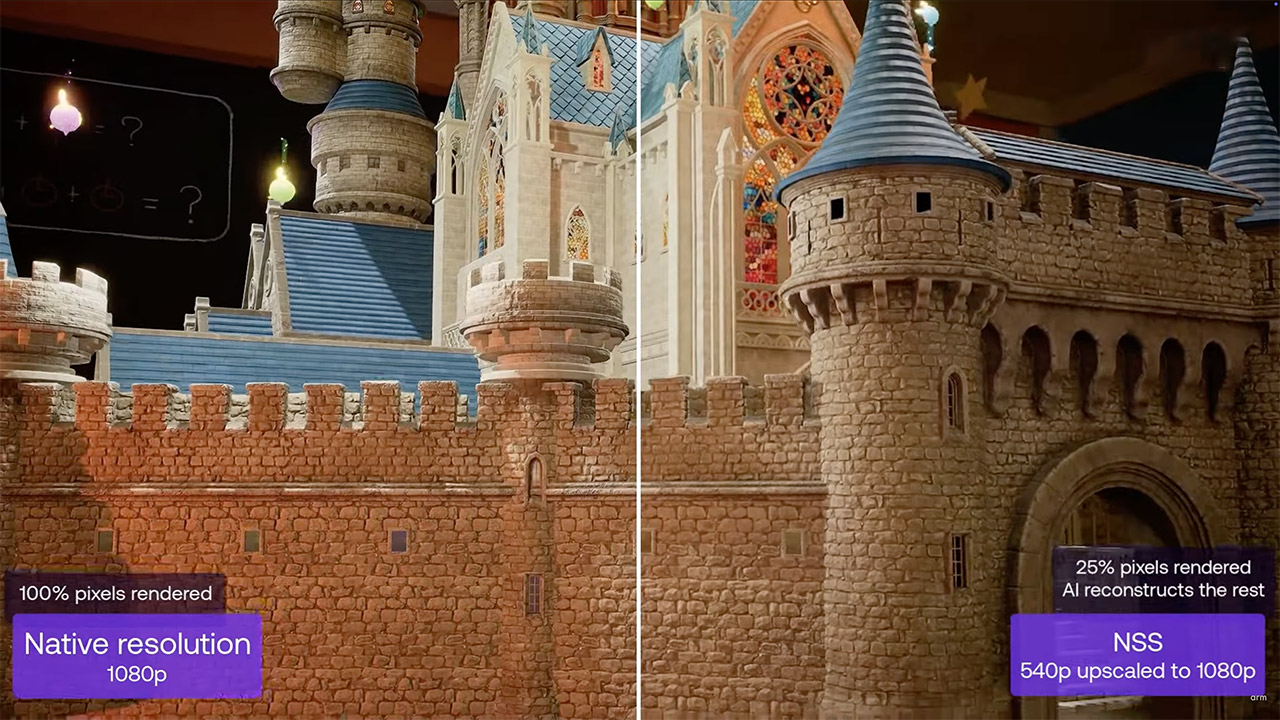

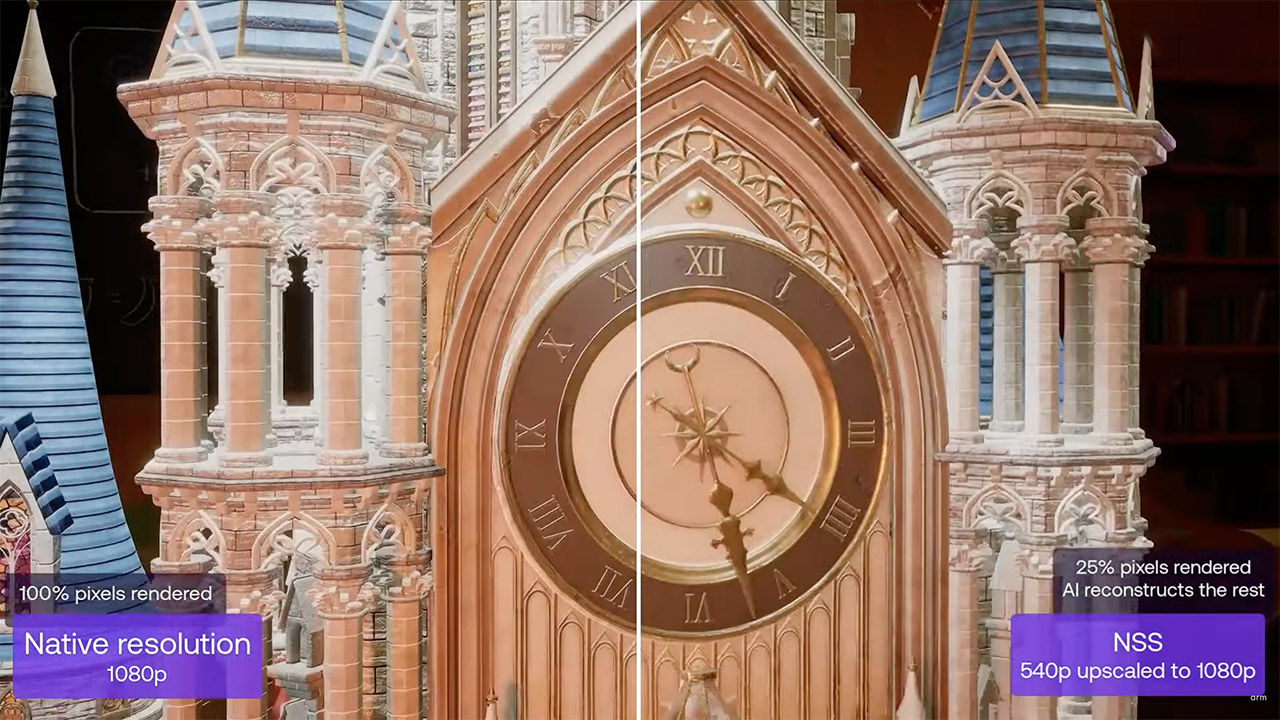

Arm’s Neural Super Sampling is a new way to make games look better on your phone. Instead of rendering every frame at high resolution, which taxes the GPU and eats up power, NSS renders at a lower resolution, say 540p, and uses a neural network to upscale it to 1080p. The result is images that look almost as good as if they had been rendered at the higher resolution from the start, while cutting the GPU workload by up to 50%, according to Arm. In a demo called Enchanted Castle, Arm showed this in action, upscaling each 540p frame to 1080p in 4 milliseconds. That’s fast enough to keep games running smoothly.
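As a rough sanity check on those numbers, the arithmetic can be sketched in a few lines of Python. The 16:9 aspect ratio and the 60 fps target are assumptions made for illustration, not figures from Arm, and the function names are hypothetical.

```python
def pixel_count(height: int, aspect: tuple = (16, 9)) -> int:
    """Pixels in a frame of the given height (the aspect ratio is an assumption)."""
    width = height * aspect[0] // aspect[1]
    return width * height


def upscale_share(upscale_ms: float, fps: int) -> float:
    """Fraction of the per-frame time budget consumed by the upscaling pass."""
    frame_budget_ms = 1000.0 / fps
    return upscale_ms / frame_budget_ms


low, high = pixel_count(540), pixel_count(1080)
print(f"540p: {low} px, 1080p: {high} px, ratio: {high / low:.1f}x")
print(f"4 ms upscale at 60 fps uses {upscale_share(4.0, 60):.0%} of the frame budget")
```

Under these assumptions a 540p frame has a quarter of the pixels of a 1080p frame, yet the quoted saving is "up to 50%" rather than 75%. That is plausibly because not all GPU work scales with resolution and the upscaling pass itself takes time out of every frame.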

The secret is in Arm’s new neural accelerators, which are built directly into the GPU’s shader cores. These aren’t separate chips bolted on next to the CPU; they’re designed to scale with the GPU itself, whether it’s a modest 5-core configuration or a powerful 16-core one. Unlike previous upscaling approaches that rely on hand-tuned algorithms, NSS uses a trained neural network to analyze frames, motion, and depth data, resulting in crisper images with fewer visual artifacts such as ghosting or flickering.

Developers get a head start with Arm’s Neural Graphics Development Kit, which is available now, a year before the hardware hits devices. It’s a playground for coders, with an Unreal Engine plugin, sample code and open models on GitHub and Hugging Face. There’s also a Vulkan emulation layer so you can test your games on a PC as if they were running on a future Arm GPU. Epic Games, Tencent and NetEase are already testing it out, a sign of early industry support.

NSS builds on Arm’s earlier work with Accuracy Super Resolution (ASR), a non-AI upscaling method already used in games like Fortnite. NSS takes it further by replacing hand-tuned heuristics with machine learning, aiming for image quality comparable to NVIDIA’s DLSS but optimized for mobile hardware.

NSS works in three steps. First, a preprocessing stage gathers data such as color, motion vectors and depth, bundling them into a neat package for the neural network. Then the neural accelerator works its magic and produces a set of parameters that tell each pixel what to look like. Finally, a post-processing stage applies those parameters to create the final upscaled image, using techniques like filtering and sample accumulation to make everything look crisp and consistent. The neural network even keeps track of previous frames using a “hidden state” to maintain smoothness over time, which helps avoid visual hiccups.
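To make the three stages more concrete, here is a toy sketch in plain Python. It is not Arm’s implementation: the "network" is a hand-written stand-in rather than a trained model, frames are small grayscale grids, and every name (`preprocess`, `stand_in_network`, `postprocess`, `ToyUpscaler`) is hypothetical. It also skips the motion-vector reprojection of history that a real temporal upscaler would typically perform.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def preprocess(color: Grid, motion: List[List[Tuple[int, int]]], depth: Grid):
    """Stage 1: bundle per-pixel color, motion magnitude and depth."""
    return [
        [(color[y][x], abs(motion[y][x][0]) + abs(motion[y][x][1]), depth[y][x])
         for x in range(len(color[0]))]
        for y in range(len(color))
    ]


def stand_in_network(features, hidden: Optional[Grid]):
    """Stage 2: placeholder for the trained network (an assumption, not Arm's model).

    Returns per-pixel blend weights plus an updated hidden state.
    """
    h, w = len(features), len(features[0])
    if hidden is None:
        hidden = [[0.0] * w for _ in range(h)]
    params, new_hidden = [], []
    for y in range(h):
        prow, hrow = [], []
        for x in range(w):
            _, motion_mag, _ = features[y][x]
            # Hidden state here: a running average of motion per pixel.
            smoothed = 0.5 * hidden[y][x] + 0.5 * motion_mag
            # Made-up rule: more motion means trusting the current frame more.
            prow.append(min(1.0, 0.2 + 0.4 * smoothed))
            hrow.append(smoothed)
        params.append(prow)
        new_hidden.append(hrow)
    return params, new_hidden


def postprocess(color: Grid, params: Grid, history: Optional[Grid], scale: int = 2) -> Grid:
    """Stage 3: nearest-neighbor upsample, then accumulate with the previous output."""
    out = []
    for big_y in range(len(color) * scale):
        row = []
        for big_x in range(len(color[0]) * scale):
            y, x = big_y // scale, big_x // scale
            current = color[y][x]
            if history is None:
                row.append(current)
            else:
                a = params[y][x]
                row.append(a * current + (1 - a) * history[big_y][big_x])
        out.append(row)
    return out


class ToyUpscaler:
    """Carries the hidden state and output history from frame to frame."""

    def __init__(self, scale: int = 2):
        self.scale = scale
        self.hidden: Optional[Grid] = None
        self.history: Optional[Grid] = None

    def upscale(self, color, motion, depth) -> Grid:
        features = preprocess(color, motion, depth)
        params, self.hidden = stand_in_network(features, self.hidden)
        self.history = postprocess(color, params, self.history, self.scale)
        return self.history


# Usage: a static 2x2 frame upscaled to 4x4.
static_motion = [[(0, 0)] * 2 for _ in range(2)]
flat_depth = [[1.0] * 2 for _ in range(2)]
upscaler = ToyUpscaler()
frame = upscaler.upscale([[0.0, 1.0], [1.0, 0.0]], static_motion, flat_depth)
print(len(frame), "x", len(frame[0]))
```

The point of the sketch is the data flow: the network stage never writes pixels directly. It only emits per-pixel parameters, and the post-processing stage turns those into the final image while blending in earlier frames.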