Think of Meta’s MusicGen as ChatGPT for tunes: you describe the music you want in text, and it generates the audio. This single-stage, auto-regressive Transformer model was trained over a 32 kHz EnCodec tokenizer with four codebooks sampled at 50 Hz. Unlike Google’s MusicLM, MusicGen doesn’t require a self-supervised semantic representation, so it can generate all four codebooks in a single pass.
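Those numbers translate into a concrete token budget: at 50 Hz with four codebooks, each second of 32 kHz audio becomes 50 frames, or 200 tokens. The MusicGen paper produces several codebooks with one model by interleaving them. The sketch below assumes the simple "delay" pattern, in which codebook k is offset by k steps, so T frames take T + 3 decoding steps rather than the 4T a fully flattened sequence would need. The helper names (`token_budget`, `delay_pattern`) are illustrative and are not AudioCraft functions.

```python
# Assumed constants, taken from the description of MusicGen's tokenizer.
SAMPLE_RATE_HZ = 32_000   # EnCodec audio sample rate
FRAME_RATE_HZ = 50        # codebook frames per second
NUM_CODEBOOKS = 4


def token_budget(seconds: float) -> tuple:
    """Return (frames, total tokens) needed to represent `seconds` of audio."""
    frames = int(seconds * FRAME_RATE_HZ)
    return frames, frames * NUM_CODEBOOKS


def delay_pattern(num_frames: int, num_codebooks: int = NUM_CODEBOOKS):
    """Lay out (codebook, frame) tokens so codebook k is shifted right by k steps.

    Each column is one autoregressive step: the model emits one token per
    codebook in parallel, so a single model covers all codebooks.
    Empty slots (None) are padding.
    """
    steps = num_frames + num_codebooks - 1
    grid = [[None] * steps for _ in range(num_codebooks)]
    for k in range(num_codebooks):
        for t in range(num_frames):
            grid[k][t + k] = (k, t)
    return grid


if __name__ == "__main__":
    print(SAMPLE_RATE_HZ // FRAME_RATE_HZ, "audio samples per frame")
    print(token_budget(10), "frames/tokens for 10 s")
    for row in delay_pattern(3):
        print(row)
```

For 10 seconds of audio, `token_budget(10)` gives 500 frames and 2,000 tokens, and the delayed layout needs 503 decoding steps.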

MusicGen was trained on 20,000 hours of licensed music. The team relied on an internal dataset of 10K high-quality music tracks, as well as on ShutterStock and Pond5 music data. Try it out here now, or download the files.

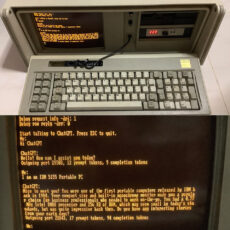

“Audiocraft requires Python 3.9, PyTorch 2.0.0, and a GPU with at least 16 GB of memory (for the medium-sized model),” said Meta.
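Those requirements can be turned into a quick preflight check before installing. The sketch below is a hypothetical helper, not part of AudioCraft; it assumes "Python 3.9" and "PyTorch 2.0.0" are minimum versions and that the 16 GB figure applies to the medium model. When PyTorch is installed it reads `torch.__version__`, `torch.cuda.is_available()`, and `torch.cuda.get_device_properties(0).total_memory`. Cards sold as 16 GB can report slightly less than that, so treat a near miss as a warning rather than a hard failure.

```python
import sys
from typing import List, Optional, Tuple

# Assumed minimums, taken from Meta's stated requirements (treated as lower bounds).
MIN_PYTHON = (3, 9)
MIN_TORCH = (2, 0, 0)
MIN_GPU_GB = 16.0  # stated for the medium-sized model


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '2.0.1+cu118' into (2, 0, 1); pre-release suffixes are ignored."""
    core = version.split("+")[0]
    numbers = []
    for part in core.split(".")[:3]:
        digits = ""
        for ch in part:  # keep only the leading digits, e.g. '0a0' -> '0'
            if not ch.isdigit():
                break
            digits += ch
        numbers.append(int(digits) if digits else 0)
    while len(numbers) < 3:  # '2.0' -> (2, 0, 0) so comparisons are fair
        numbers.append(0)
    return tuple(numbers)


def check_requirements(python_version: Tuple[int, int],
                       torch_version: Optional[str],
                       gpu_memory_gb: Optional[float]) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if tuple(python_version) < MIN_PYTHON:
        problems.append(f"Python 3.9+ needed, found {python_version[0]}.{python_version[1]}")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif parse_version(torch_version) < MIN_TORCH:
        problems.append(f"PyTorch 2.0.0+ needed, found {torch_version}")
    if gpu_memory_gb is None:
        problems.append("no CUDA GPU detected")
    elif gpu_memory_gb < MIN_GPU_GB:
        problems.append(f"{MIN_GPU_GB:.0f} GB GPU memory suggested, found {gpu_memory_gb:.1f} GB")
    return problems


def detect_environment() -> Tuple[Tuple[int, int], Optional[str], Optional[float]]:
    """Query the interpreter and, if available, PyTorch and the first CUDA device."""
    py = (sys.version_info.major, sys.version_info.minor)
    try:
        import torch
    except ImportError:
        return py, None, None
    gpu_gb = None
    if torch.cuda.is_available():
        # total_memory is reported in bytes; convert to decimal gigabytes.
        gpu_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    return py, str(torch.__version__), gpu_gb


if __name__ == "__main__":
    issues = check_requirements(*detect_environment())
    print("OK" if not issues else "\n".join(issues))
```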