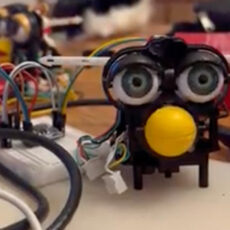

When Boston Dynamics’ Spot robot meets GPT-4 Turbo with Vision, you get Hawkeye. In short, the robot waits for a spoken request from the user, transcribes it with speech-to-text, and then determines the type and intent of the request.

Next, the relevant data is fed into OpenAI’s GPT-4 Turbo with Vision, which generates a chain of commands for the robot to follow, allowing it to map out its actions and navigate to the goal. Hawkeye then incrementally re-evaluates and re-plans its movements until it reaches the intended goal.
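The article does not publish Hawkeye’s code, but the plan, execute, re-evaluate, re-plan cycle it describes is a common closed-loop pattern. Below is a minimal sketch of that pattern under toy assumptions: the robot moves on a 2D grid, `plan_steps` is a hypothetical stand-in for the GPT-4 Turbo planner (it just emits straight-line moves), and `slip_ticks` is a made-up way to simulate a step failing so that re-planning is triggered.

```python
Position = tuple[int, int]

# Unit moves on a toy grid (assumption: Hawkeye's real action space is not described).
MOVES: dict[str, Position] = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def plan_steps(position: Position, goal: Position) -> list[str]:
    """Hypothetical stand-in for the LLM planner: a list of unit moves toward goal."""
    dx, dy = goal[0] - position[0], goal[1] - position[1]
    return ["E" if dx > 0 else "W"] * abs(dx) + ["N" if dy > 0 else "S"] * abs(dy)


def run_closed_loop(
    start: Position, goal: Position, slip_ticks: set[int], max_ticks: int = 100
) -> tuple[Position, int, int]:
    """Follow the plan, check each step's outcome, and re-plan when it deviates.

    Returns (final position, ticks used, number of re-plans).
    """
    position = start
    plan = plan_steps(position, goal)  # initial chain of commands
    replans = 0
    tick = 0
    while position != goal and tick < max_ticks:
        step = plan.pop(0)
        dx, dy = MOVES[step]
        expected = (position[0] + dx, position[1] + dy)
        if tick not in slip_ticks:  # simulated disturbance: on these ticks the move fails
            position = expected
        tick += 1
        if position != expected:  # re-evaluate: observation disagrees with the plan
            plan = plan_steps(position, goal)  # re-plan from the observed state
            replans += 1
    return position, tick, replans
```

For example, `run_closed_loop((0, 0), (2, 1), slip_ticks={1})` still reaches `(2, 1)`, taking one extra tick and one re-plan compared with the undisturbed run. Re-planning only when an observation contradicts the expected state keeps planner calls (in a real system, LLM requests) to a minimum.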

“Looking ahead, we envision further refining Hawkeye by incorporating dynamic object tracking along with facial recognition tech to improve the breadth of human-assistance tasks. This would allow it to dynamically change its motion to avoid moving obstacles. Spot has huge potential as a guide dog once combined with AI, being able to talk to and guide its owner. With Hawkeye’s interaction software, we believe this level of robotics collaboration will shape the future,” said the researchers.

[Source]