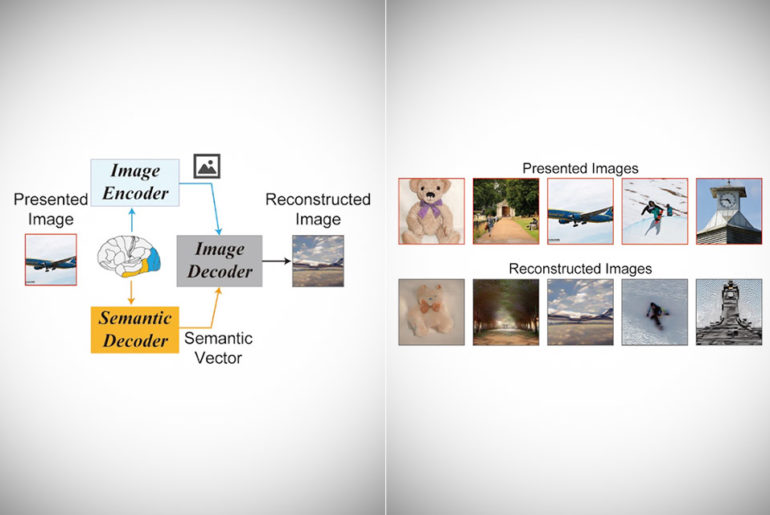

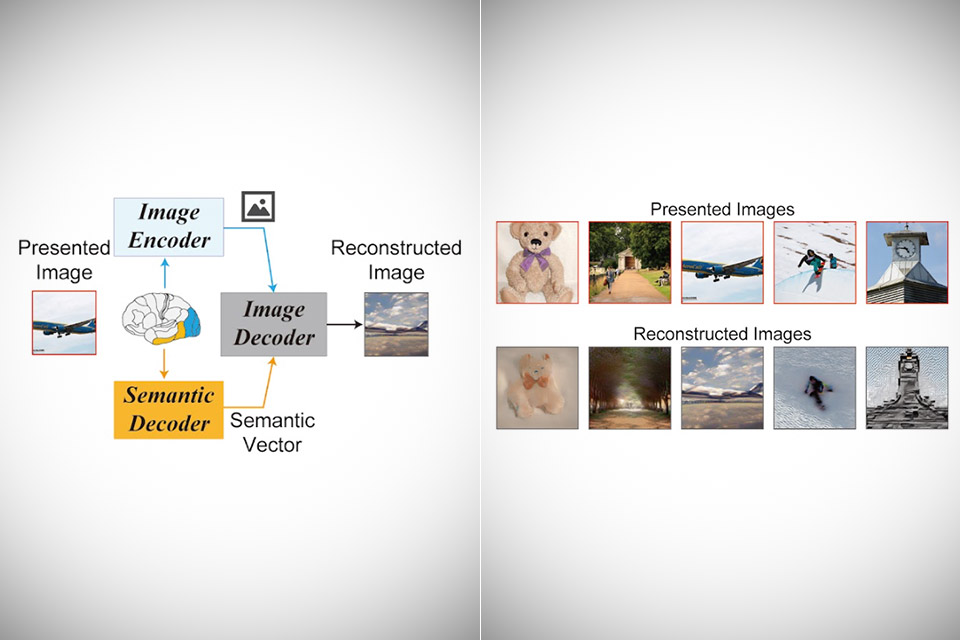

Artificial intelligence can be combined with recorded human brain activity to reconstruct high-resolution images, with no brain-computer interface required. How? Researchers from the Graduate School of Frontier Biosciences at Osaka University used a diffusion model (DM) to decode fMRI data from human brains and reconstruct visual experiences. Specifically, they relied on a latent diffusion model (LDM) widely known as Stable Diffusion.
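
The article does not say how brain signals are turned into something Stable Diffusion can use. A common approach is to fit a simple linear decoder from voxel responses to the model's latent vectors. The sketch below assumes a closed-form ridge regression and uses synthetic data. `fit_ridge`, `decode`, and all array sizes are hypothetical illustrations, not the researchers' code. The same decoder could be fit a second time to predict a text-conditioning embedding instead of an image latent.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^{-1} X^T Y.

    X: (n_trials, n_voxels) fMRI responses, assumed already centered.
    Y: (n_trials, latent_dim) target latent vectors for the viewed images.
    """
    n_voxels = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, X.T @ Y)

def decode(X, W):
    """Predict latent vectors for new fMRI trials with the fitted weights."""
    return X @ W

# Synthetic stand-in data (hypothetical sizes; real fMRI has far more voxels).
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, latent_dim = 200, 20, 50, 16
true_W = rng.normal(size=(n_voxels, latent_dim))
fmri_train = rng.normal(size=(n_train, n_voxels))
fmri_test = rng.normal(size=(n_test, n_voxels))
latents_train = fmri_train @ true_W
latents_test = fmri_test @ true_W

# Fit on training trials, then evaluate on held-out trials.
W = fit_ridge(fmri_train, latents_train, alpha=1e-3)
pred = decode(fmri_test, W)
print("max abs error on held-out trials:", np.abs(pred - latents_test).max())
```

Because only a linear map is learned, this style of decoder fits the article's point that the large generative model itself is left untouched.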

This simple framework requires no training or fine-tuning of complex deep generative models: it reconstructs high-resolution images from functional Magnetic Resonance Imaging (fMRI) signals using Stable Diffusion as-is. Stable Diffusion's text-conditioned generation process incorporates the semantic information expressed by the conditioning text while still retaining the appearance of the original image.
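Keeping the appearance of a starting image while steering its content with text is typical of image-to-image diffusion. In that setup, the starting latent is only partially noised, and then it is denoised under the text condition. The sketch below shows only the noising half, using the standard DDPM forward process $z_t = \sqrt{\bar\alpha_t}\,z_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. It assumes a linear beta schedule, which is illustrative and not the exact schedule Stable Diffusion ships with. The text-conditioned denoiser is not implemented. `partially_noise`, `similarity`, and the latent shape are hypothetical.

```python
import numpy as np

def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear DDPM noise schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def partially_noise(z0, t, alpha_bar, rng):
    """Forward diffusion q(z_t | z_0): sqrt(a) * z0 + sqrt(1 - a) * eps."""
    a = alpha_bar[t]
    eps = rng.normal(size=z0.shape)
    return np.sqrt(a) * z0 + np.sqrt(1.0 - a) * eps

def similarity(a, b):
    """Pearson correlation between two flattened latents."""
    return np.corrcoef(a.ravel(), b.ravel())[0, 1]

rng = np.random.default_rng(0)
alpha_bar = linear_alpha_bar()
z0 = rng.normal(size=(4, 64, 64))  # hypothetical decoded image latent

z_light = partially_noise(z0, 100, alpha_bar, rng)  # light noising: structure survives
z_heavy = partially_noise(z0, 999, alpha_bar, rng)  # full noising: nearly pure noise
print(similarity(z0, z_light), similarity(z0, z_heavy))
```

With light noising, the latent stays strongly correlated with the original, so the denoiser can change semantics without discarding layout. With full noising, almost nothing of the original remains.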

“We show that our proposed method can reconstruct high-resolution images with high fidelity in straightforward fashion, without the need for any additional training and fine-tuning of complex deep-learning models. Overall, our study proposes a promising method for reconstructing images from human brain activity, and provides a new framework for understanding DMs,” said the researchers.