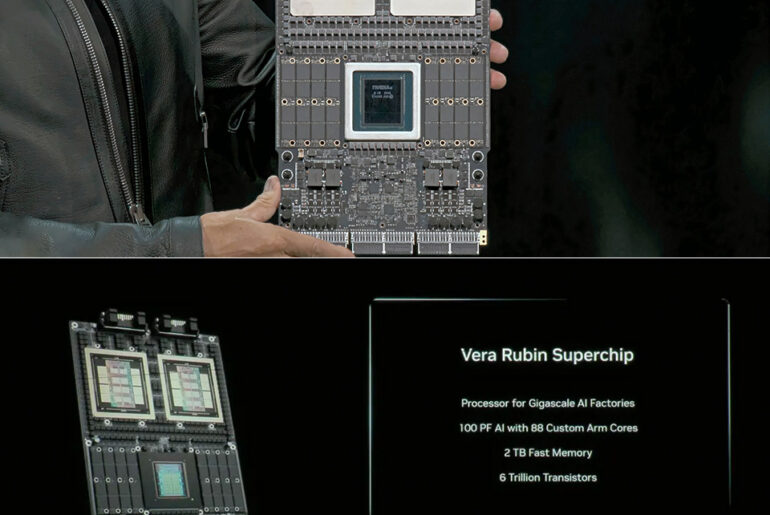

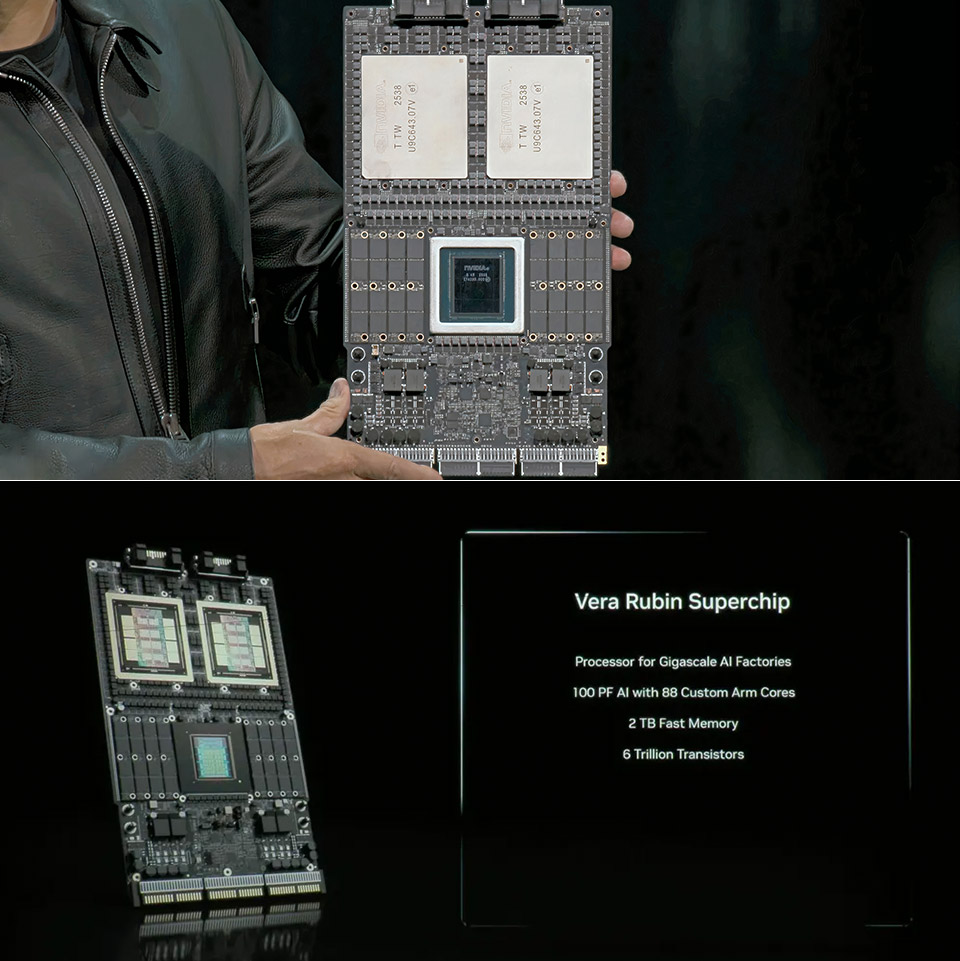

NVIDIA CEO Jensen Huang walked onto the GTC stage in Washington this week holding what looked like a blueprint for the future, not a computer part. This was the Vera Rubin Superchip, a flat expanse of circuitry the size of a large dinner plate with enough oomph to handle the heaviest AI workloads.

Huang called it a beautiful computer, and from the close-up shots on the big screens behind him, it’s hard to disagree. At the center is the Vera CPU, a square chip with 88 cores built on Arm designs customised by NVIDIA. Those cores can handle 176 threads at once, two per core, so they can juggle multiple streams of data without breaking a sweat. Flanking it are two Rubin GPUs, each one a monster made from two large silicon dies – the kind that stretch to the maximum size the lithography tools at factories like TSMC can etch. Each GPU has 288 gigabytes of HBM4 high-bandwidth memory, the fastest available, stacked in eight tall towers right next to the dies.

Around the edges are eight SOCAMM2 slots for LPDDR modules, layers of quick-access system memory that can be swapped out or expanded as needed. No cables hang off the sides; instead, five connectors hug the borders – two up top for NVLink switches that tie racks together, and three below for power, PCIe lanes and CXL hooks to share data across machines.

The Rubin GPUs arrived in NVIDIA’s labs just weeks ago, fresh from Taiwan in late September. Their cooling plates match the footprint of the Blackwell chips, but inside things get more complex. Each GPU breaks down into two compute sections, plus I/O helpers that manage the flood of signals. The Vera CPU shows its own clever assembly: faint lines across its surface hint at multiple chiplets pieced together, with an extra I/O block tucked nearby and odd green traces spilling from its pads – perhaps ties to hidden helpers underneath. The whole package links the CPU to its GPUs at 1.8 terabytes per second through NVLink-C2C paths, fusing three separate brains into one.

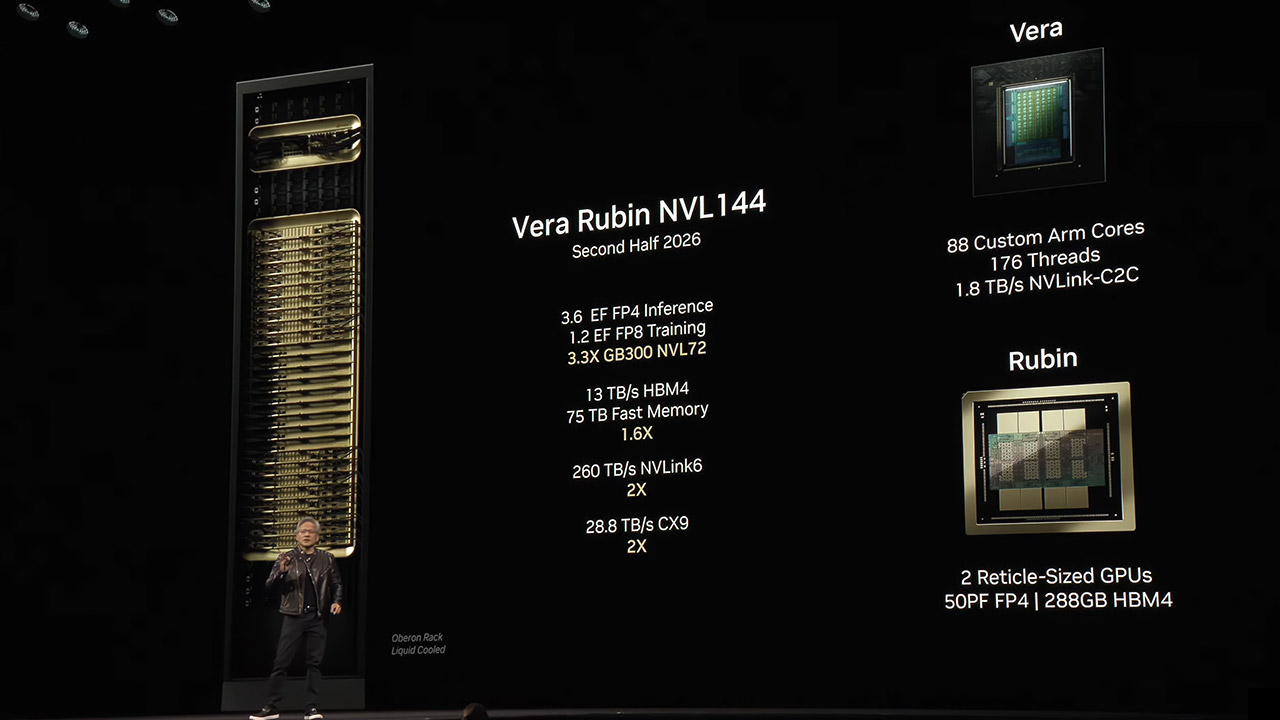

In a full rack, called NVL144, 144 of these GPU dies team up across trays that also pack eight ConnectX-9 network cards and a BlueField-4 data processor to keep everything in sync. Huang noted the rack’s bandwidth is equivalent to a full second of global internet traffic, a scale that turns ordinary servers into something much bigger.

A single Vera Rubin Superchip manages 100 petaflops of FP4 calculations, the low-precision math that speeds up AI pattern-matching without giving up much accuracy. Scale that up to the NVL144 rack and things get really crazy: 3.6 exaflops of FP4 inference, the stage where the models behind your favourite chatbots or image generators actually work out what they’re looking at, and 1.2 exaflops of FP8 training, where the system learns from enormous datasets. HBM4 memory bandwidth comes in at a blinding 13 terabytes per second, with a whopping 75 terabytes of fast memory on hand. Then there are the NVLink connections, at an eye-popping 260 terabytes per second, and the CX9 links aren’t too shabby either at 28.8 terabytes per second. Pit this against the GB300 Blackwell racks that are just starting to roll out and the picture is clear: roughly three times the raw speed, 60% more memory and double the bandwidth for ferrying data around the system. Early developers who’ve got their hands on the technology are already seeing how much it can cut wait times on massive simulations such as drug discovery and climate modeling.
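The rack-level number can be sanity-checked from the per-chip figures quoted above. The short Python sketch below does that arithmetic; the variable names are made up for illustration, and it assumes every GPU die in the rack sits on a two-GPU superchip like the one Huang held up, which the article implies but does not state outright.

```python
# Figures taken from the article; the grouping logic is an assumption.
GPU_DIES_PER_RACK = 144         # "144 of these GPU dies" in an NVL144 rack
DIES_PER_GPU = 2                # each Rubin GPU is built from two dies
GPUS_PER_SUPERCHIP = 2          # two Rubin GPUs flank one Vera CPU
FP4_PFLOPS_PER_SUPERCHIP = 100  # quoted FP4 figure for one superchip
HBM4_GB_PER_GPU = 288           # quoted HBM4 capacity per GPU

gpus = GPU_DIES_PER_RACK // DIES_PER_GPU
superchips = gpus // GPUS_PER_SUPERCHIP

# 1 exaflop = 1000 petaflops, 1 terabyte = 1000 gigabytes (decimal units)
rack_fp4_exaflops = superchips * FP4_PFLOPS_PER_SUPERCHIP / 1000
rack_hbm4_tb = gpus * HBM4_GB_PER_GPU / 1000

print(f"{gpus} GPUs on {superchips} superchips")
print(f"FP4 compute: {rack_fp4_exaflops:.1f} exaflops")
print(f"HBM4 capacity: {rack_hbm4_tb:.1f} TB")
```

Under those assumptions the 36 superchips add up to the 3.6 exaflops quoted for FP4 inference, and the HBM4 stacks alone come to about 20.7 terabytes, so the 75-terabyte figure must count more than HBM4.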

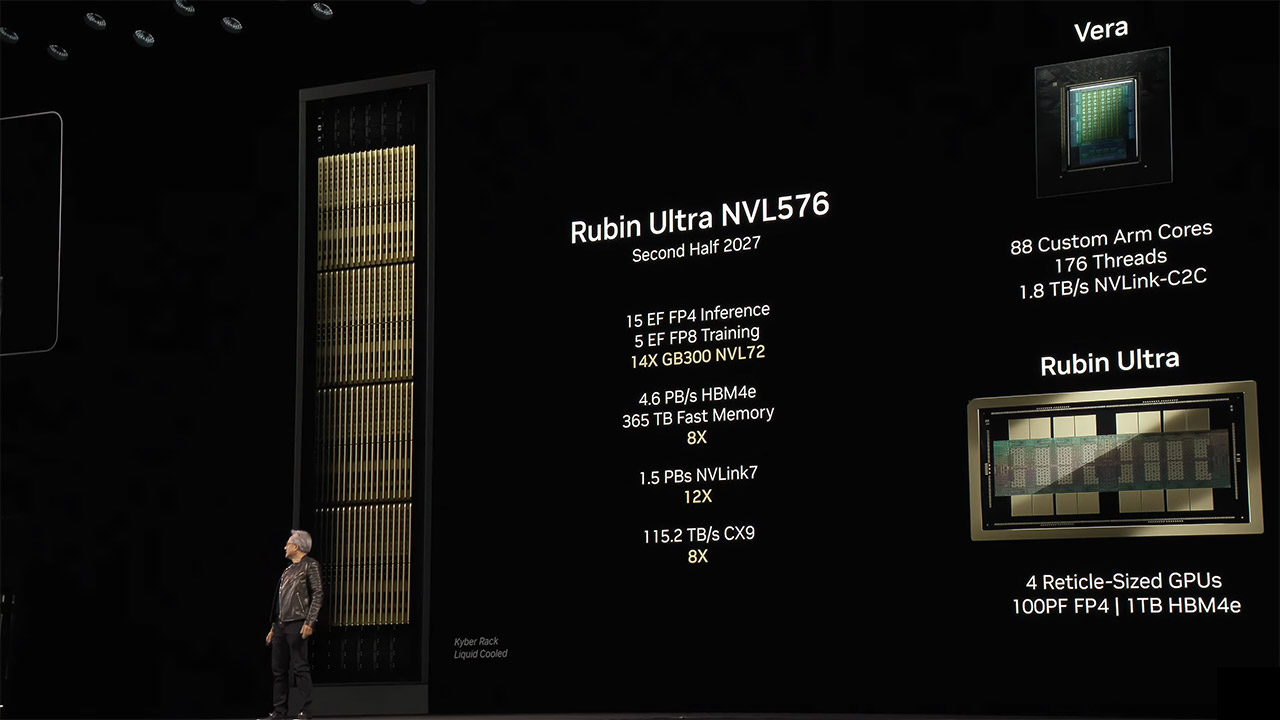

Mass production of Vera Rubin is set to kick off sometime in the third or fourth quarter of 2026, around the same time as the Blackwell Ultra starts hitting the shelves. Then, in late 2027, comes Rubin Ultra, an NVL576 rack that puts four GPUs on each package instead of two, with a cool terabyte of HBM4e memory spread out over 16 stacks. It’s a big deal: we’re talking 15 exaflops in FP4 inference and 5 exaflops in FP8 training, which is 14 times the power of today’s GB300 setups. Memory bandwidth is an insane 4.6 petabytes per second, and total fast memory is a whopping 365 terabytes. NVLink and CX9 connections are also seeing a serious boost, up to 1.5 petabytes per second and 115.2 terabytes per second respectively, which should let entire data centres run like a well-oiled machine. And if you thought that was exciting, NVIDIA’s got even more tricks up its sleeve, including the Feynman chips that are supposed to arrive in 2028, running on even finer silicon processes than before.
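For a rough sense of the generational jump, the rack figures quoted in this article can be divided against each other. The Python sketch below does only that; the dictionary keys are illustrative labels, not NVIDIA terms, and memory bandwidth is left out because the article doesn’t say whether the 13 terabyte and 4.6 petabyte figures are measured on the same basis.

```python
# Rack-level figures as quoted in the article (decimal units).
nvl144 = {
    "fp4_inference_ef": 3.6,
    "fp8_training_ef": 1.2,
    "capacity_tb": 75,     # the 75 TB figure
    "nvlink_tbps": 260,
    "cx9_tbps": 28.8,
}
nvl576 = {
    "fp4_inference_ef": 15,
    "fp8_training_ef": 5,
    "capacity_tb": 365,    # the 365 TB figure
    "nvlink_tbps": 1500,   # 1.5 PB/s expressed in TB/s
    "cx9_tbps": 115.2,
}

# Ratio of Rubin Ultra NVL576 to Vera Rubin NVL144 for each metric
ratios = {key: nvl576[key] / nvl144[key] for key in nvl144}

for key, ratio in ratios.items():
    print(f"{key}: {ratio:.2f}x")
```

On these numbers Rubin Ultra lands between four and six times the NVL144 rack depending on the metric, with compute and CX9 networking at the low end and NVLink at the high end.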

[Source]