Maker Nick Maselli built a pretty decent laundry-folding robot prototype in under 24 hours for a client who simply needed the job done. He named it Sourccey. It's essentially a mobile, cylindrical box with a dome on top, two articulated arms, and a central vertical lift for reaching items. Most of the structural components, from arm parts to the outer enclosure, were 3D-printed in PLA filament. Because the parts print quickly, the team could swap them out throughout the build window.

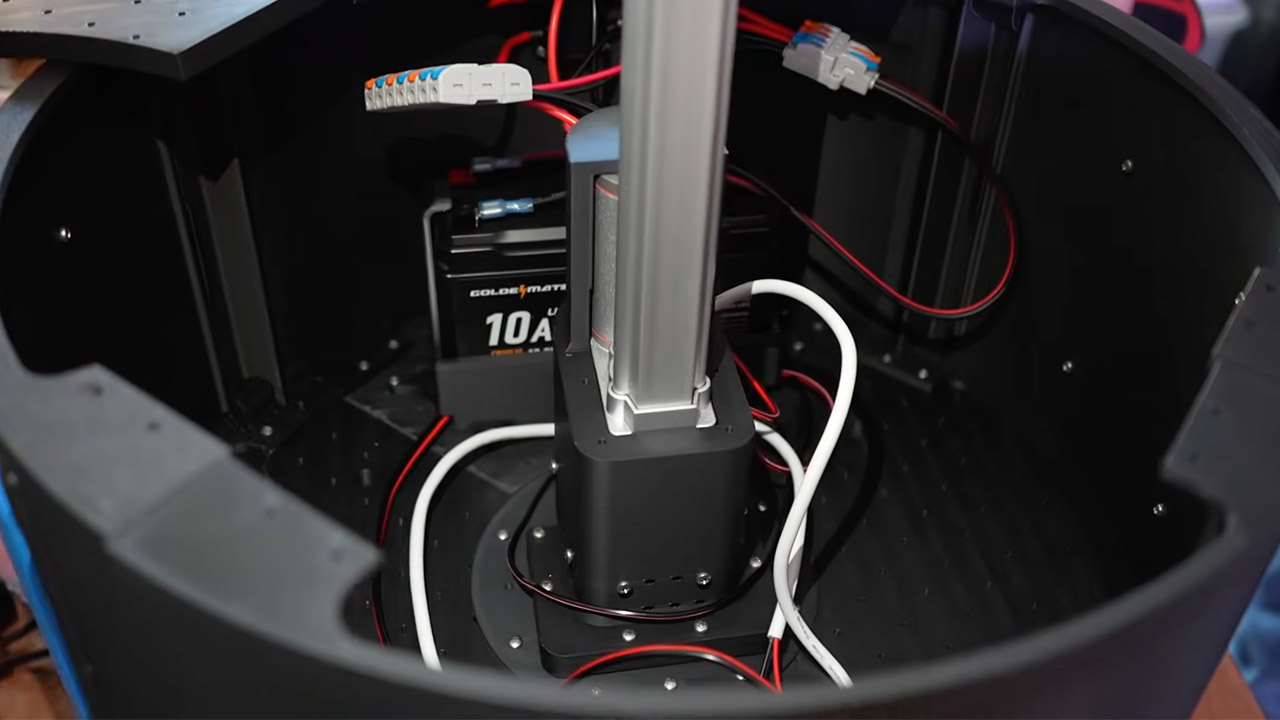

A Raspberry Pi 5 handles all of the on-board computing: it takes feeds from four cameras, controls the motor drivers, runs a display, speakers, and microphone, and oversees the entire operation. Power comes from a 12 V, 10 Ah lithium iron phosphate (LiFePO4) battery, chosen for its safety and longevity. A custom power distribution board, combined with voltage converters, gives every component a steady supply without overloading any of them.
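
The battery figures lend themselves to a quick power budget. Nominal stored energy is voltage times capacity, so 12 V × 10 Ah gives about 120 Wh (LiFePO4 packs are usually rated at 12.8 V nominal, so treat this as a round-number estimate). The Python sketch below turns that into runtime and current estimates. The per-subsystem loads, converter efficiencies, and 80% usable-capacity figure are hypothetical placeholders, not measurements from Sourccey, and the helper names are invented for illustration.

```python
from dataclasses import dataclass

# Battery figures from the article: 12 V nominal, 10 Ah LiFePO4.
BATTERY_VOLTAGE_V = 12.0
BATTERY_CAPACITY_AH = 10.0


@dataclass
class Load:
    name: str
    watts: float          # HYPOTHETICAL average draw of this subsystem
    converter_eff: float  # ASSUMED DC-DC efficiency for its rail (1.0 = fed straight from the battery)


def battery_energy_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal stored energy: E = V * Ah."""
    return voltage_v * capacity_ah


def input_power_w(loads: list[Load]) -> float:
    """Power drawn from the battery, including converter losses."""
    return sum(load.watts / load.converter_eff for load in loads)


def runtime_hours(loads: list[Load], usable_fraction: float = 0.8) -> float:
    """Runtime if only `usable_fraction` of nominal capacity is used (ASSUMED 80%)."""
    usable_wh = battery_energy_wh(BATTERY_VOLTAGE_V, BATTERY_CAPACITY_AH) * usable_fraction
    return usable_wh / input_power_w(loads)


def current_a(power_w: float, voltage_v: float) -> float:
    """Current at a given voltage, I = P / V; useful when sizing fuses."""
    return power_w / voltage_v


if __name__ == "__main__":
    # HYPOTHETICAL loads for illustration only.
    loads = [
        Load("Pi 5, cameras, display, audio", 18.0, 0.9),  # via a 5 V buck converter
        Load("arm servos", 27.0, 0.9),                      # via a servo-voltage converter
        Load("Z-axis lift", 10.0, 1.0),                     # straight from the battery
    ]
    draw = input_power_w(loads)
    print(f"battery energy: {battery_energy_wh(BATTERY_VOLTAGE_V, BATTERY_CAPACITY_AH):.0f} Wh")
    print(f"battery draw:   {draw:.1f} W ({current_a(draw, BATTERY_VOLTAGE_V):.1f} A)")
    print(f"est. runtime:   {runtime_hours(loads):.2f} h")
```

With the hypothetical 60 W draw above, the pack would last a little over an hour and a half, and the battery side would carry about 5 A, which is the kind of figure used to size the main fuse.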

For accurate folding, the arms use several servos working in precise, coordinated motion. A vertical Z-axis actuator runs through the center, raising and lowering the arms so they can grab objects from the floor or a table. Grippers at the ends hold the fabric firmly. Getting all of this working required careful wiring and safety features such as fuses to keep the system stable.
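
The article doesn't say how the servos are coordinated. One common way to get smooth, synchronized arm motion is linear interpolation in joint space: split a move into small steps so every joint starts and stops at the same time. The Python sketch below assumes position-controlled servos commanded in degrees; `plan_coordinated_move`, the three-joint arm, and the angles are hypothetical, not Sourccey's actual control code.

```python
import math


def plan_coordinated_move(
    start: list[float], target: list[float], max_step_deg: float
) -> list[list[float]]:
    """Linearly interpolate every joint from `start` to `target` so that all joints
    begin and finish together. The joint with the largest travel sets the number of
    steps, so no joint moves more than `max_step_deg` per step."""
    if len(start) != len(target):
        raise ValueError("start and target must have the same number of joints")
    if max_step_deg <= 0:
        raise ValueError("max_step_deg must be positive")

    largest_travel = max((abs(t - s) for s, t in zip(start, target)), default=0.0)
    steps = max(1, math.ceil(largest_travel / max_step_deg))

    waypoints = []
    for i in range(1, steps + 1):
        f = i / steps  # fraction of the move completed, identical for every joint
        # s*(1-f) + t*f lands exactly on the target when f == 1.0
        waypoints.append([s * (1.0 - f) + t * f for s, t in zip(start, target)])
    return waypoints


if __name__ == "__main__":
    # HYPOTHETICAL 3-joint arm: shoulder, elbow, wrist angles in degrees.
    for waypoint in plan_coordinated_move([0.0, 0.0, 0.0], [90.0, 45.0, -30.0], 10.0):
        print([round(angle, 1) for angle in waypoint])
```

Each waypoint would then be sent to the servos at a fixed rate. Real firmware would typically also clamp commands to joint limits and ease in and out rather than moving at constant speed.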

Instead of hard-coded motions, Sourccey relies entirely on artificial intelligence to fold a towel or any other item. A human first demonstrates the move, and those demonstrations become training data for the AI model, which then trains overnight on several powerful GPUs. Once training is done, the model is deployed back to the Raspberry Pi, and Sourccey can work independently: the cameras spot a towel and work out where it is and what it looks like, then the robot grabs it and executes the folding sequence it learned, all running on the Pi. Crucially, it isn't locked into one rigid routine. Because vision guides the whole process and the robot relies on learned patterns rather than being told exactly what to do, it can handle the natural give in fabric.
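
The article doesn't name the model or training method Sourccey uses, so the Python sketch below is a deliberately simple stand-in for the learn-from-demonstration idea, not a reproduction of it. It uses k-nearest-neighbor behavior cloning, a basic imitation-learning baseline: demonstrations are stored as (observation, action) pairs, and at run time the policy copies the actions recorded in the most similar situations. The observation features, action format, and all names here are hypothetical. A system like the one described would train a neural network instead, which is what an overnight GPU run is for.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Demo:
    observation: tuple[float, ...]  # HYPOTHETICAL features from camera frames (e.g. towel corner positions)
    action: tuple[float, ...]       # HYPOTHETICAL joint command recorded while a human demonstrated


class NearestNeighborPolicy:
    """Behavior cloning via k-nearest neighbors: return the mean action of the k
    demonstrations whose observations are closest to the current one."""

    def __init__(self, demos: Sequence[Demo], k: int = 3) -> None:
        if not demos:
            raise ValueError("need at least one demonstration")
        self.demos = list(demos)
        self.k = max(1, min(k, len(self.demos)))

    def act(self, observation: Sequence[float]) -> tuple[float, ...]:
        nearest = sorted(self.demos, key=lambda d: math.dist(d.observation, observation))[: self.k]
        dims = len(nearest[0].action)
        return tuple(sum(d.action[j] for d in nearest) / len(nearest) for j in range(dims))


def run_episode(
    policy: NearestNeighborPolicy,
    observe: Callable[[], Sequence[float]],
    execute: Callable[[tuple[float, ...]], None],
    steps: int,
) -> None:
    """Closed loop: re-read the cameras before every step instead of replaying a fixed
    trajectory, so the robot can react to how the fabric actually moved."""
    for _ in range(steps):
        execute(policy.act(observe()))


if __name__ == "__main__":
    # Toy demonstrations: 2-D observations mapped to 1-D actions.
    demos = [
        Demo((0.0, 0.0), (0.0,)),
        Demo((1.0, 0.0), (10.0,)),
        Demo((0.0, 1.0), (20.0,)),
    ]
    policy = NearestNeighborPolicy(demos, k=1)
    print(policy.act((0.9, 0.1)))
```

The `run_episode` loop is the part that mirrors the point about fabric: because each action is chosen from a fresh observation, a towel that slips or bunches changes what the robot does next.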

The entire build took less than 24 hours, with the hardware assembled during the day and the AI training running overnight. There were a few hiccups along the way: a motor clip needed resoldering, a defective 3D-printed part had to be reprinted, and a couple of team members called it a day early, which cut into the available time. Despite the rush, everything held together and the prototype completed the task.

[Source]