Apple’s new ReALM (Reference Resolution As Language Modeling) AI model can understand not only ambiguous references to what is on your iPhone’s screen, but also conversational and background context. This could lead to more natural interactions with Siri.

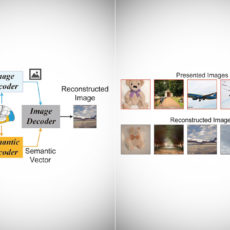

Think of ReALM as an AI model similar to OpenAI’s GPT-4, but with a single purpose: providing context to other AI systems such as Siri. It reconstructs the screen by labeling each on-screen entity along with its location, generating a text-based representation of the visual layout that can be passed on to the voice assistant. Siri can then use that representation as context clues when interpreting user requests. ReALM could make its first appearance at WWDC 2024 in June, when Apple unveils iOS 18 and possibly Siri 2.0.
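To make the idea of a text-based screen representation concrete, here is a minimal Python sketch. It is not Apple's implementation: the `ScreenEntity` type, the `screen_to_text` function, the `line_margin` threshold, and the `[n]` label format are all hypothetical names and choices made for illustration. The sketch assumes each entity arrives with its visible text and the center coordinates of its bounding box, groups entities that sit at roughly the same height into one line, and numbers them in reading order.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """Hypothetical on-screen element: its visible text and center position."""
    text: str
    x: float  # horizontal center, in pixels
    y: float  # vertical center, in pixels


def screen_to_text(entities, line_margin=10.0):
    """Flatten on-screen entities into a labeled, text-only layout.

    Entities whose vertical centers lie within `line_margin` of the first
    entity in a row are placed on the same text line, separated by tabs.
    Rows are separated by newlines. The margin value is an assumption.
    """
    # Sort top to bottom, then left to right.
    ordered = sorted(entities, key=lambda e: (e.y, e.x))

    # Group entities into rows by vertical proximity.
    rows = []
    for entity in ordered:
        if rows and abs(entity.y - rows[-1][0].y) <= line_margin:
            rows[-1].append(entity)
        else:
            rows.append([entity])

    # Label each entity in reading order so later text can refer to it.
    lines = []
    label = 1
    for row in rows:
        cells = []
        for entity in sorted(row, key=lambda e: e.x):
            cells.append(f"[{label}] {entity.text}")
            label += 1
        lines.append("\t".join(cells))
    return "\n".join(lines)
```

With a representation like this, a request such as "call that number" could in principle be resolved against the labeled phone-number entry in the text rather than against raw pixels.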

“We also benchmark against GPT-3.5 and GPT-4, with our smallest model achieving performance comparable to that of GPT-4, and our larger models substantially outperforming it,” said the researchers.

[Source]