Photo credit: Sreang Hok/Cornell University

Video used to be a window onto reality, a reliable record of events. Deepfakes are eroding that trust: anyone with a decent computer can produce video of world leaders saying things they never said, or of events that never happened. The fakes keep getting more convincing, but a team at Cornell has come up with a way to fight back. Their solution, called noise-coded illumination, uses something as ordinary as light to watermark video in a way that’s almost impossible to fake.

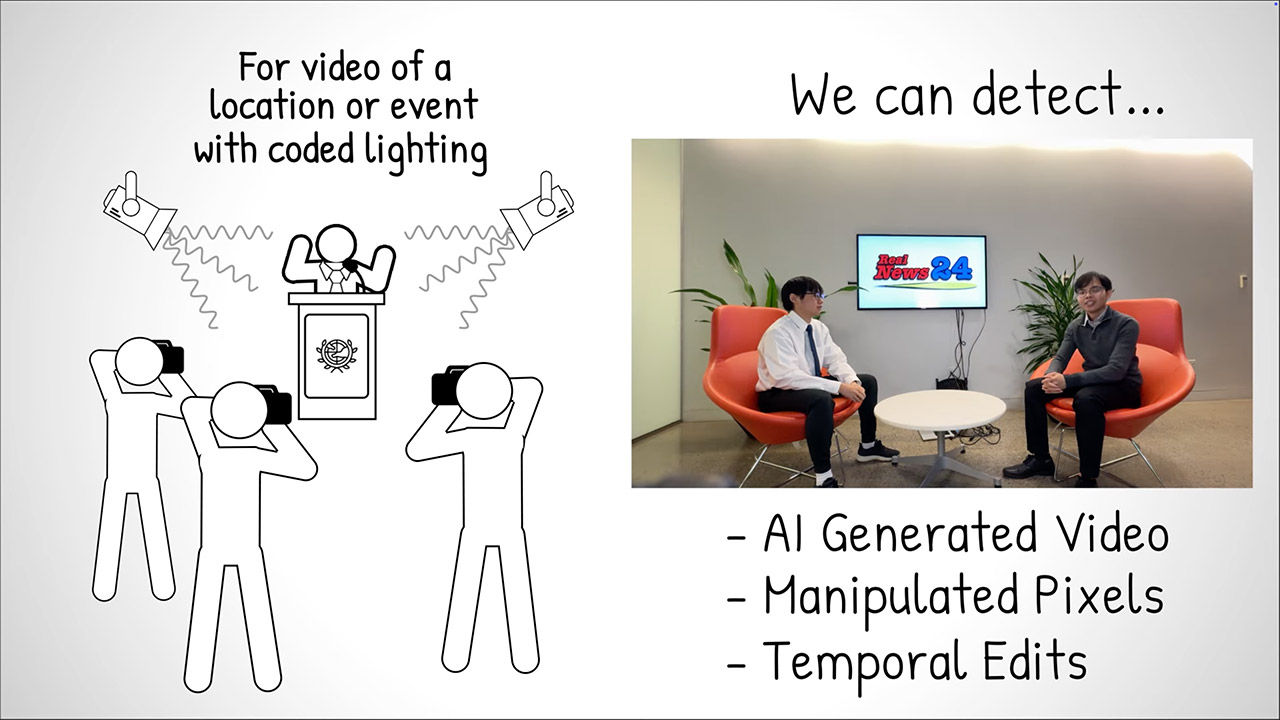

Cornell’s approach rests on a deceptively simple idea: make the light carry a secret. Led by Assistant Professor Abe Davis and graduate student Peter Michael, the team built a system in which lights flicker in a specific pattern too subtle for human eyes to notice. These fluctuations are called “noise” and act like a digital fingerprint. Any video recorded under this light captures the code, whether it’s shot on a smartphone, a professional camera or anything in between. The beauty is universality: no special equipment and no cooperation needed from whoever is filming. If the light’s in the room, the watermark’s in the video.
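To make the idea of a keyed flicker concrete, here is a minimal Python sketch under stated assumptions: a secret integer key seeds a pseudorandom generator, and each video frame’s light level is the base brightness nudged by about 1% of a zero-mean noise value. The function names (`make_code`, `light_levels`), the 1% amplitude and the choice of white Gaussian noise are illustrative assumptions, not details of the Cornell system.

```python
import random

# Hypothetical sketch: a secret integer key seeds a pseudorandom generator,
# so only someone holding the key can regenerate the same flicker pattern.
# White Gaussian noise is an assumption; the real system's code may differ.


def make_code(secret_key: int, n_frames: int) -> list[float]:
    """Return a zero-mean, unit-variance noise code, one value per frame."""
    rng = random.Random(secret_key)
    raw = [rng.gauss(0.0, 1.0) for _ in range(n_frames)]
    mean = sum(raw) / n_frames
    centered = [x - mean for x in raw]
    std = (sum(x * x for x in centered) / n_frames) ** 0.5
    return [x / std for x in centered]


def light_levels(base: float, code: list[float], amplitude: float = 0.01) -> list[float]:
    """Brightness per frame: the base level nudged up or down by ~1% of the code."""
    return [base * (1.0 + amplitude * c) for c in code]


code = make_code(secret_key=42, n_frames=300)
levels = light_levels(base=100.0, code=code)
# Average brightness is unchanged; only a tiny, noise-like ripple rides on top.
print(round(sum(levels) / len(levels), 6))
```

Because the code averages to zero, the light’s overall brightness stays the same, which is one reason a small, noise-like ripple is hard to notice.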


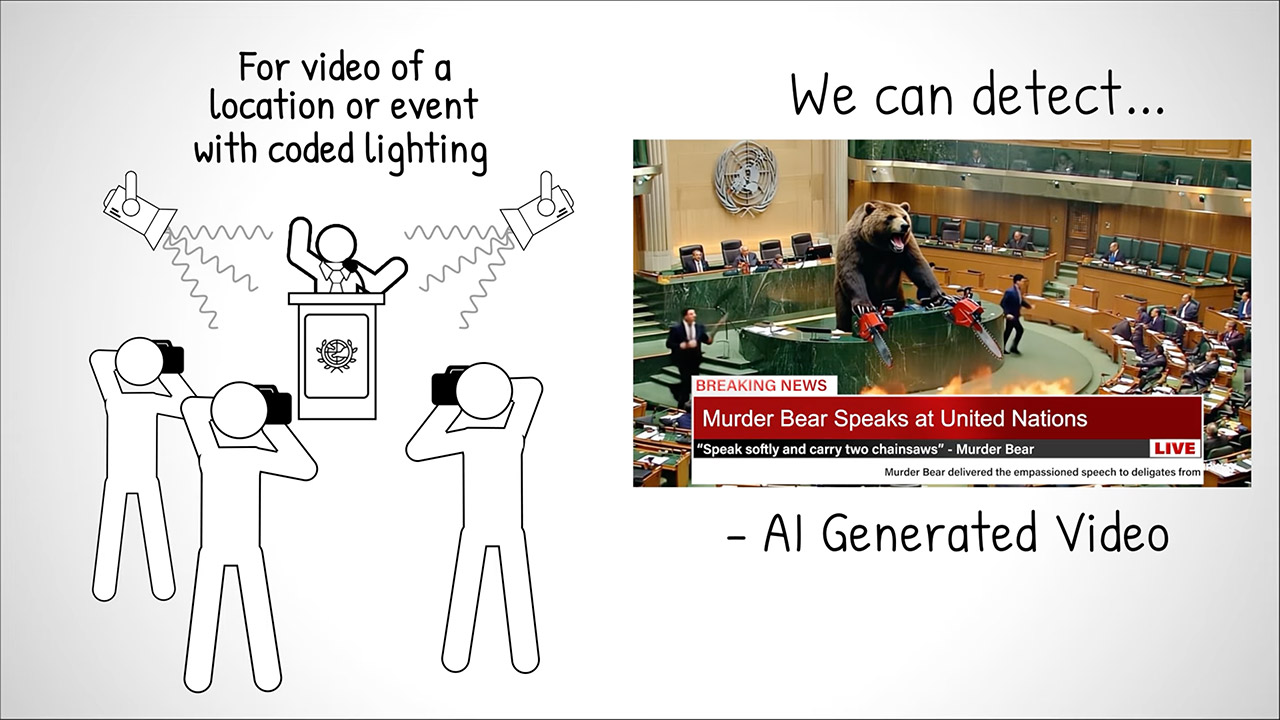

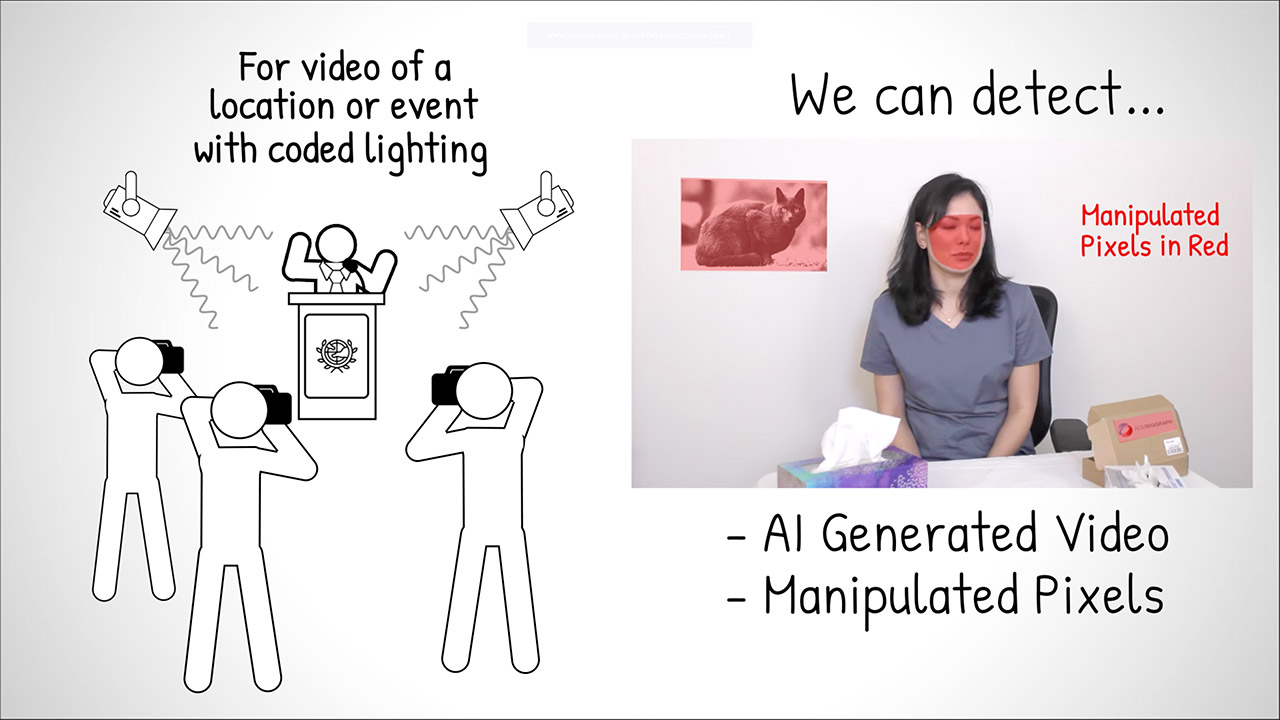

How does it work? Imagine a press conference or an interview lit by a few lamps or even a computer screen. Those light sources are programmed to vary their brightness ever so slightly, following a unique code. The flicker is so faint that it blends into the natural variations in light we already ignore. In effect, the code carries a low-res, time-stamped version of the video itself, like a shadow recording that lives in the light. A forensic analyst with the right key can extract this shadow version from the footage and compare it to the main video. If the two match, the video is legit. If someone has tampered with it, say by editing out a key moment or swapping in a fake face, the shadow version shows glitches, blacked-out sections or just random noise.
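The extraction step can be approximated with a simple correlation, a standard way to pull a known signal out of noisy data. The hypothetical sketch below simulates a tiny 16-pixel scene lit by coded light, then correlates each pixel’s brightness over time with the code. The result, a “code image”, is a faint copy of the scene that only the right key reveals. It computes one image over the whole clip; repeating the same calculation over short sliding windows would give a time-varying, low-resolution shadow video. All names and parameters are assumptions, and this is a simplified stand-in rather than the team’s published method.

```python
import random

# Hypothetical, simplified sketch (not the Cornell team's algorithm):
# correlate each pixel's brightness over time with the secret code.


def make_code(key: int, n: int) -> list[float]:
    """Zero-mean, unit-variance pseudorandom code derived from a secret key."""
    rng = random.Random(key)
    raw = [rng.gauss(0.0, 1.0) for _ in range(n)]
    mu = sum(raw) / n
    sd = (sum((x - mu) ** 2 for x in raw) / n) ** 0.5
    return [(x - mu) / sd for x in raw]


def record_video(scene, code, amplitude=0.01, noise=0.5, seed=0):
    """Simulate frames: pixel = reflectance * coded light (base 100) + sensor noise."""
    rng = random.Random(seed)
    return [[r * 100.0 * (1.0 + amplitude * c) + rng.gauss(0.0, noise) for r in scene]
            for c in code]


def code_image(frames, code):
    """Per-pixel correlation with the code: the faint 'shadow' of the scene."""
    n = len(frames)
    image = []
    for p in range(len(frames[0])):
        series = [frame[p] for frame in frames]
        mu = sum(series) / n
        image.append(sum((v - mu) * c for v, c in zip(series, code)) / n)
    return image


def pearson(a, b):
    """Pearson correlation between two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


scene = [0.2 + 0.05 * p for p in range(16)]  # toy reflectances for 16 pixels
code = make_code(key=7, n=1200)
frames = record_video(scene, code)
mean_frame = [sum(f[p] for f in frames) / len(frames) for p in range(16)]

shadow = code_image(frames, code)                     # analyst has the right key
wrong = code_image(frames, make_code(key=8, n=1200))  # analyst guesses a key
print(round(pearson(shadow, mean_frame), 3))           # near 1: shadow matches video
```

With the wrong key, the code image is much weaker and mostly noise, which is why the analyst needs the key to recover a meaningful shadow.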

Modern light sources like smart bulbs and computer screens can be programmed with a bit of software. Older lamps? Slap on a chip the size of a postage stamp and they’re good to go. Even better, you can layer multiple codes in the same scene. Imagine three lights, each pulsing with its own unique pattern. A forger would have to crack and replicate every single one, and those fakes would have to align perfectly with each other.
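Layering codes works because independent pseudorandom codes are nearly uncorrelated, so each one can be pulled out separately. This sketch, again a simplified correlation-based illustration with hypothetical names and toy numbers, lights a 12-pixel scene with three lights, each with its own key and each covering its own third of the frame, and recovers one code image per light.

```python
import random

# Hypothetical sketch: three lights, each with its own secret key,
# each illuminating a different part of a 12-pixel toy scene.


def make_code(key: int, n: int) -> list[float]:
    """Zero-mean, unit-variance pseudorandom code derived from a secret key."""
    rng = random.Random(key)
    raw = [rng.gauss(0.0, 1.0) for _ in range(n)]
    mu = sum(raw) / n
    sd = (sum((x - mu) ** 2 for x in raw) / n) ** 0.5
    return [(x - mu) / sd for x in raw]


def record_multi(weights, codes, amplitude=0.01, noise=0.5, seed=1):
    """weights[k][p] = how strongly light k reaches pixel p (toy values)."""
    rng = random.Random(seed)
    frames = []
    for t in range(len(codes[0])):
        frame = []
        for p in range(len(weights[0])):
            # Sum the coded contribution of every light at this pixel.
            lit = sum(100.0 * w[p] * (1.0 + amplitude * code[t])
                      for w, code in zip(weights, codes))
            frame.append(lit + rng.gauss(0.0, noise))
        frames.append(frame)
    return frames


def code_image(frames, code):
    """Per-pixel correlation of brightness over time with one light's code."""
    n = len(frames)
    image = []
    for p in range(len(frames[0])):
        series = [frame[p] for frame in frames]
        mu = sum(series) / n
        image.append(sum((v - mu) * c for v, c in zip(series, code)) / n)
    return image


keys = [11, 22, 33]
codes = [make_code(k, 1200) for k in keys]
# Light k covers pixels 4k..4k+3 and nothing else.
weights = [[1.0 if p // 4 == k else 0.0 for p in range(12)] for k in range(3)]
frames = record_multi(weights, codes)
images = [code_image(frames, c) for c in codes]
for k, img in enumerate(images):
    print(k, [round(v, 2) for v in img])
```

Each key lights up only its own region, so a convincing forgery would have to produce a consistent shadow under every key at once.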

The team’s work will be presented at SIGGRAPH 2025 in Vancouver. They have tested it in real-world settings, from indoor interviews to outdoor scenes, and across different skin tones to make sure it holds up. The results show that the codes are detectable and that tampering stands out like a sore thumb. For example, if someone cuts a chunk out of a political speech, the shadow video will show a glaring gap. If someone uses AI to generate a fake video from scratch, the code simply won’t produce a coherent shadow, exposing the fraud.
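Why does a cut show up as a gap? Once frames are removed, the footage after the cut no longer lines up with the code, and the correlation collapses. The sketch below is a simplified, assumption-laden illustration rather than the team’s detector: it tracks a single toy pixel, cuts 400 frames from a 2,000-frame clip, and measures code strength in 200-frame windows. The window size and the 0.5 threshold are hypothetical choices. It also checks a from-scratch fake that carries no code at all.

```python
import random

# Hypothetical sketch: detect a cut by measuring how strongly each window
# of footage correlates with the code it is supposed to carry.


def make_code(key: int, n: int) -> list[float]:
    """Zero-mean, unit-variance pseudorandom code derived from a secret key."""
    rng = random.Random(key)
    raw = [rng.gauss(0.0, 1.0) for _ in range(n)]
    mu = sum(raw) / n
    sd = (sum((x - mu) ** 2 for x in raw) / n) ** 0.5
    return [(x - mu) / sd for x in raw]


def window_strength(series, code, window):
    """Code strength per window: ~1 where the code is present and aligned, ~0 otherwise."""
    out = []
    for start in range(0, len(series) - window + 1, window):
        seg = series[start:start + window]
        cs = code[start:start + window]
        mu = sum(seg) / window
        out.append(sum((v - mu) * c for v, c in zip(seg, cs)) / window)
    return out


rng = random.Random(3)
code = make_code(key=99, n=2000)
# One toy pixel with reflectance 1.0: its brightness ripple follows the code.
original = [100.0 * (1.0 + 0.01 * c) + rng.gauss(0.0, 0.5) for c in code]
edited = original[:1000] + original[1400:]  # cut 400 frames from the middle

strengths = window_strength(edited, code, window=200)
flags = [s < 0.5 for s in strengths]  # True = code missing or misaligned
print(flags)  # windows after the cut should come out True

# A "from scratch" fake: steady light plus sensor noise, no code at all.
fake = [100.0 + rng.gauss(0.0, 0.5) for _ in range(1600)]
fake_flags = [s < 0.5 for s in window_strength(fake, code, window=200)]
print(all(fake_flags))
```

This toy model ignores compression, motion and in-camera processing, all of which a real detector would have to cope with.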

Davis and Michael are upfront about the arms race against misinformation: as soon as a new defense like this rolls out, bad actors start scheming to break it. Someone could try to mimic the light’s flicker, but they’d have to know the exact codes and replicate them across every light source in the scene, which is a tall order. Plus, the system’s design draws on human perception research to keep the flicker imperceptible, so it’s hard to even know it’s there.

[Source]