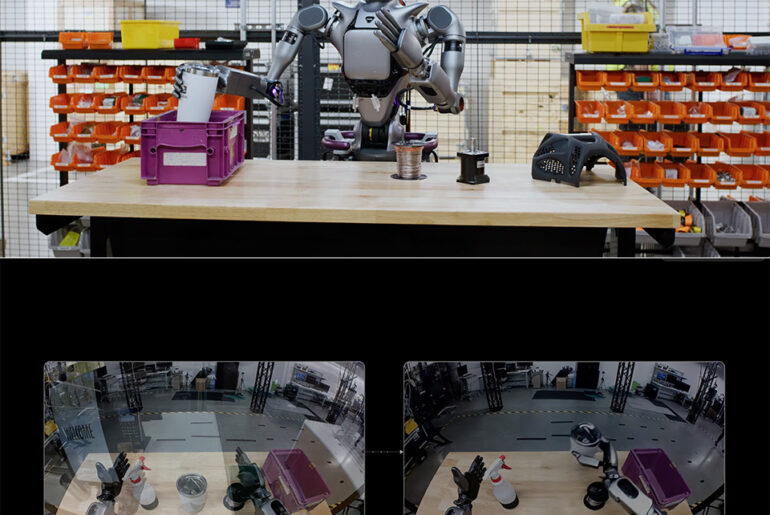

Can robots learn the way humans do, through dreams? NVIDIA’s Isaac GR00T-Dreams blueprint aims to make that a reality. Unveiled at Computex 2025, this generative AI workflow produces synthetic data for training autonomous robots.

“The Isaac GR00T-Dreams blueprint is a reference workflow for generating vast amounts of synthetic trajectory data,” explain NVIDIA researchers Oyindamola Omotuyi, Spencer Huang, Kalyan Meher Vadrevu, and Dennis Lynch in a joint announcement. This data, dubbed “neural trajectories,” teaches robots to perform new actions in novel environments, bypassing the slow grind of collecting real-world data.

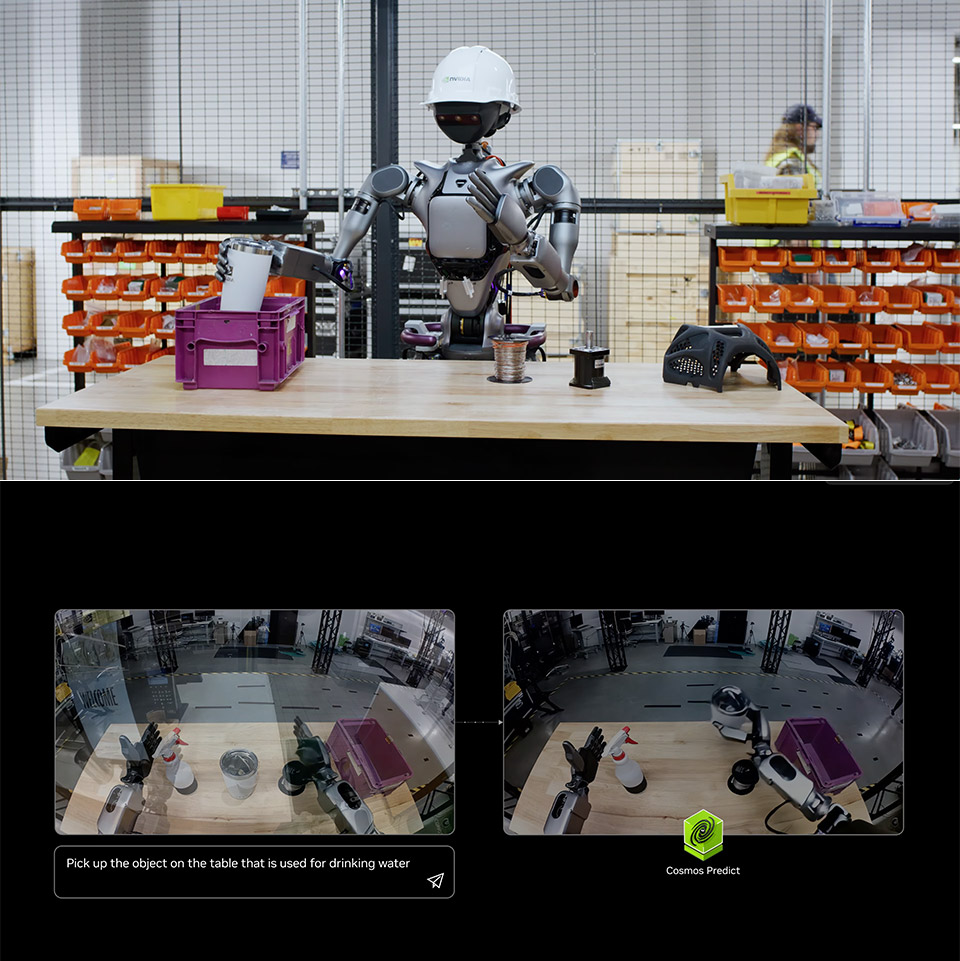

The process relies on NVIDIA’s Cosmos platform, specifically the Cosmos Predict-2 physical AI model and the Cosmos-Reason1 multimodal model, which together turn raw inputs into usable training data. Cosmos Predict-2 generates video simulations of a robot performing the task, while Cosmos-Reason1 filters out impractical or awkward moves. The blueprint then extracts action tokens, which NVIDIA’s team describes as “compressed pieces of data easily processed by the robot’s neural network.”
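The generate, filter, and extract stages described above can be pictured as a simple loop. The following is a minimal sketch, not NVIDIA’s implementation: every name in it (`Dream`, `NeuralTrajectory`, `build_neural_trajectories`, the `toy_*` functions, and the `min_score` threshold) is hypothetical, and the toy functions merely stand in for the video generator, the reasoning filter, and the action extractor.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = List[float]  # toy stand-in for an image; real frames would be pixel arrays


@dataclass
class Dream:
    """A generated video clip for one task prompt."""
    prompt: str
    frames: List[Frame]


@dataclass
class NeuralTrajectory:
    """A kept clip paired with the action tokens extracted from it."""
    prompt: str
    frames: List[Frame]
    action_tokens: List[int]


def build_neural_trajectories(
    prompts: Sequence[str],
    generate: Callable[[str], Dream],
    plausibility: Callable[[Dream], float],
    extract_actions: Callable[[Dream], List[int]],
    min_score: float = 0.5,  # assumed cutoff, chosen only for illustration
) -> List[NeuralTrajectory]:
    """Generate one clip per prompt, drop low-scoring clips, label the rest."""
    kept = []
    for prompt in prompts:
        dream = generate(prompt)
        if plausibility(dream) < min_score:
            continue  # filtered out as impractical
        kept.append(NeuralTrajectory(prompt, dream.frames, extract_actions(dream)))
    return kept


# Toy stand-ins so the sketch runs without any model; none of these are NVIDIA APIs.
def toy_generate(prompt: str) -> Dream:
    return Dream(prompt, [[float(t)] for t in range(4)])


def toy_plausibility(dream: Dream) -> float:
    return 0.0 if "backflip" in dream.prompt else 1.0


def toy_extract_actions(dream: Dream) -> List[int]:
    # One token per pair of consecutive frames: the rounded change in the first feature.
    return [round(b[0] - a[0]) for a, b in zip(dream.frames, dream.frames[1:])]


trajectories = build_neural_trajectories(
    ["pick up the cup", "do a backflip"],
    toy_generate, toy_plausibility, toy_extract_actions,
)
print([(t.prompt, t.action_tokens) for t in trajectories])
```

Passing the three stages in as callables keeps the loop independent of any particular model, so each toy function could in principle be swapped for a real component without touching the pipeline itself.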

These neural trajectories are essentially 2D videos enriched with 3D action data, and together they form a large-scale synthetic dataset. Developers can train robots on this dataset alone or combine it with real-world data to boost performance. NVIDIA’s research team claims this approach cut development time for its previous GR00T model from three months, when relying on live motion capture, to just 36 hours. That’s a seismic shift in efficiency.
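One simple way to combine synthetic and real-world data is to fix the share of synthetic samples in each training batch. The sketch below illustrates that idea only; the function name `mixed_batch`, the `synthetic_fraction` parameter, and the 75% ratio in the usage line are assumptions made for this example, not values or methods reported by NVIDIA.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(
    real: Sequence[T],
    synthetic: Sequence[T],
    batch_size: int,
    synthetic_fraction: float,
    rng: random.Random,
) -> List[T]:
    """Draw a batch in which a fixed share of the samples is synthetic."""
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be between 0 and 1")
    n_synthetic = round(batch_size * synthetic_fraction)
    n_real = batch_size - n_synthetic
    if (n_synthetic and not synthetic) or (n_real and not real):
        raise ValueError("a required data source is empty")

    batch: List[T] = []
    if n_synthetic:
        batch += rng.choices(synthetic, k=n_synthetic)  # sampled with replacement
    if n_real:
        batch += rng.choices(real, k=n_real)
    rng.shuffle(batch)  # avoid a fixed synthetic-then-real ordering
    return batch


# Usage: 8 samples per batch, 75% of them synthetic (an arbitrary illustrative ratio).
batch = mixed_batch(["r1", "r2"], ["s1", "s2", "s3"], 8, 0.75, random.Random(0))
```

Setting `synthetic_fraction` to 1.0 corresponds to training on the synthetic dataset alone, while lower values blend in real-world samples.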

What’s particularly compelling is how GR00T-Dreams sidesteps the data bottleneck plaguing robotics. Gathering and labeling real-world data is a slog—humans must perform tasks repeatedly, often under controlled conditions, to create usable datasets. “Real-world robots can only collect a limited amount of data each day,” NVIDIA’s researchers point out, “but with GR00T-Dreams, developers can generate as much training data as needed.” By leaning on synthetic data, the blueprint frees developers from endless motion capture sessions, letting robots learn faster and adapt to dynamic settings.

GR00T-Dreams lets robots learn from minimal human input. Companies like Neura Robotics and Vorwerk are already tapping into NVIDIA’s synthetic-data tooling, using GR00T-Mimic to post-train models with custom synthetic data. “The GR00T-Dreams blueprint unlocks advanced capabilities for robot learning, including new behaviors, new environments, and more,” NVIDIA’s technical blog emphasizes.
