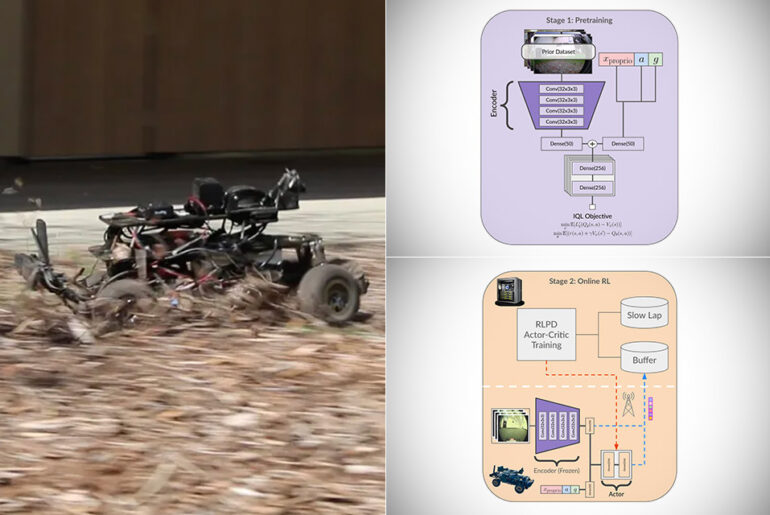

UC Berkeley researchers have developed FastRLAP, a system that teaches robot cars to drive fast autonomously. They combined sample-efficient reinforcement learning with a task-relevant pretraining objective and an autonomous practicing framework to teach the car high-speed driving policies in the real world with as little as 20 minutes of interaction.

The method consists of two phases: pretraining, and Autonomous Practicing with Online Reinforcement Learning (APORL). Pretraining uses offline reinforcement learning (implicit Q-learning, or IQL) to learn from a diverse offline dataset that was collected on a different robot with a similar task objective. APORL then applies recent sample-efficient online reinforcement learning techniques that make use of a small amount of prior data to learn a fast-driving policy in real time. In other words, the pretraining stage draws on existing data of a robot being driven around obstacles, and the second stage lets you place the car on a track and show it where to go before it begins learning autonomously.
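The article does not describe FastRLAP's code, but the offline phase is named as IQL, whose core idea is compact: fit a value function V(s) by expectile regression on Q(s, a) - V(s), which lets V approximate the value of the best actions seen in the data without querying unseen actions, then extract a policy by weighting dataset actions by exp(β · advantage). The sketch below illustrates only those two ingredients on scalar numbers. The function names and the defaults for `tau`, `beta`, and `max_weight` are hypothetical choices for illustration, not values from FastRLAP.

```python
import math

# Hypothetical illustration of two IQL ingredients; not FastRLAP's code.

def expectile_loss(diff: float, tau: float = 0.7) -> float:
    """Asymmetric squared loss |tau - 1(u < 0)| * u^2, where u = Q(s, a) - V(s).

    With tau > 0.5, positive residuals are weighted more heavily, so V is
    pushed toward an upper expectile of Q over the actions in the dataset.
    """
    weight = tau if diff >= 0 else 1.0 - tau
    return weight * diff * diff


def awr_weight(advantage: float, beta: float = 3.0, max_weight: float = 100.0) -> float:
    """Advantage-weighted regression weight exp(beta * A), clipped for stability."""
    return min(math.exp(beta * advantage), max_weight)


def fit_expectile(samples, tau=0.7, lr=0.1, steps=2000):
    """Fit a scalar V to Q samples by gradient descent on the mean expectile loss."""
    v = 0.0
    for _ in range(steps):
        grad = 0.0
        for q in samples:
            u = q - v
            w = tau if u >= 0 else 1.0 - tau
            grad += -2.0 * w * u  # derivative of w * (q - v)^2 with respect to v
        v -= lr * grad / len(samples)
    return v


if __name__ == "__main__":
    q_values = [0.0, 1.0, 2.0, 3.0, 4.0]
    print(fit_expectile(q_values, tau=0.5))  # symmetric loss: close to the mean
    print(fit_expectile(q_values, tau=0.9))  # upper expectile: above the mean
```

With `tau=0.5` the loss is symmetric and V settles at the mean of the Q samples; raising `tau` moves V toward the higher Q values, which is how IQL approximates a maximum over actions while staying within the offline data.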

“This environment consists of a large-scale (120-meter loop) outdoor course between a dense grove of trees on one side and a tree and several fallen logs on the other side. A successful policy must navigate between the trees and logs, a difficult task at high speeds. Additionally, the ground near the trees is covered in leaves, sticks, and other loose material, causing complex dynamics including highly speed-dependent over/understeer,” said the researchers.