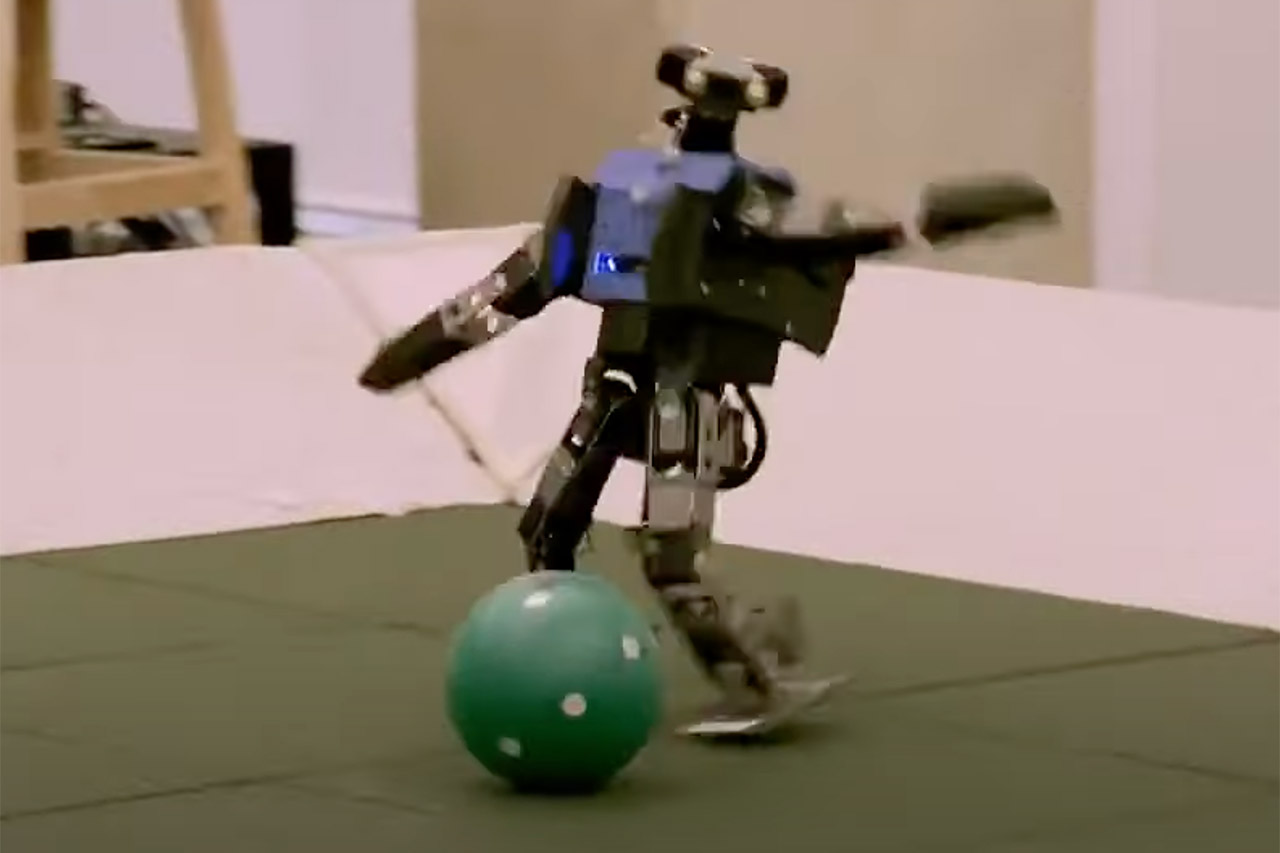

Engineers connected humanoid robots to Google DeepMind AI and watched them teach themselves how to play soccer (football). The challenge for the AI is that it has to recreate everything human players do, even the things they don’t consciously think about, such as how to move each limb and muscle precisely enough to kick a moving ball.

The timing and control required for even the smallest movements can be very tricky for AI to perfect. DeepMind had to model these robots on real humans, with multiple points of articulation and a constrained range of motion. The robots started with simple goals, such as running, before moving on to kicking a ball. They were then left to work out the rest for themselves through reinforcement learning.

“In order to ‘solve’ soccer, you have to actually solve lots of open problems on the path to artificial general intelligence [AGI]. There’s controlling the full humanoid body, coordination, which is really tough for AGI, and actually mastering both low-level motor control and things like long-term planning,” said Guy Lever, DeepMind Research Scientist.